Warm-up: Central limit theorem.

Write a code centrallim.py to illustrate the central limit theorem. With a set of M=[1,2,5,16] independent variates x_i (i=1..M), uniformly distributed over [-1,1], generate N=1000000 samples of x=(x_1+x_2+..x_M)/sqrt(M). Estimate sigma, the std deviation of x, and also the kurtosis k4=(<x^4/sigma^4-3> assuming a zero mean). Have your code print a table, as in

# M sigma kurtosis

#______________________

#

1 ##### ######

2 ##### ######

If it is helpful, formatted printing might be done with

print('{0:2d} {1:9.6f} {2:9.6f}'.format(m,sig,k4))

Also have your code generate a plot, centrallim.pdf, showing superimposed histograms for all four values of M. If you use matplotlib's hist() function, please use histtype='step' and density=True for easy comparison.

[The takeaway is the convergence of non-Gaussian processes converge "normality" as predicted by the Central Limit Theorem. I played around with various other starting distributions like x=u^3 and (of course) x=random.normal() to get a sense of the effect of finite sample size....]

The hypervelocity star LAMOST-HVS1 (discovered by Prof. Zheng and collaborators! see Zheng et al. 2014) is moving so fast that it will escape from our Galaxy. The Gaia astrometry mission also observed this star (designated as Gaia DR2 590511484409775360). From the Gaia observations, this star moves across the sky at an angular velocity of

The units are milli-arcseconds (mas) per year, where 1 mas=4.8481368e-9 radians; "x" and "y" refer to horizontal and vertical directions of travel across a tiny patch of sky. (Details for astronomers: x is along right ascension and y is declination). The uncertainties listed are "1-sigma errors" (68% confidence range) and the error distributions of these measurements are well-approximated as Gaussian.mu_x = -3.537 +/- 0.110 (mas/year [RA dir], 1-sigma error) mu_y = -0.620 +/- 0.093 (mas/year [dec dir]) rho = -0.2516 (correlation coefficient of mu_x and mu_y)

In a python script called lamost_hvs1.py, use Monte Carlo trials to estimate the angular speed, mu, in (mas/year), reporting the median speed with upper and lower error bounds that define a 68% confidence region (lower bound, upper bound are 16th-percentile and 84th-percentile of the possible mu values). Note: in astronomy this angular movement is known as "proper motion".

Hint: if you choose to use the emcee package (see info at this informal link and specifically this link with info for this problem) you might be able to easily adopt your code to the next exercise.

Another star, Gaia DR2 1540013339194597376, was recently identified (see Table 3 of this article) as being a potential hypervelocity star (unbound to the Galaxy, like LAMOST-HVS1). The distance to this star from us is estimated using its parallax, the change in the star's position in the sky from the Earth's orbit around the Sun. This star's parallax is

pax = 0.5894 +/- 0.0528 maswhere the uncertainty is sigma, the std dev of a Gaussian error distribution. If parallax were known perfectly, it would give the star's distance from the Sun as dist=1/pax, in units of kiloparsecs (kpc, 3.08e19 m) when pax is in mas (see previous exercise). The angular drift speed of this star (a.k.a., its proper motion) is mu=143.9 mas/year with errors below0.1 mas/year, which you may ignore here.

In a MCMC code called faststar.py, find the speed v_sky=mu*dist of this star in the plane of the sky, perpendicular to the line of sight between the star and the Sun. Report your result, and 68.3% confidence limits (16th and 84th percentiles), in units of km/s. Do this calculation in two ways:

p(dist|pax)~exp(-(pax-1/dist)^2/(2*sigma^2))*|dpax/ddist|where pax is the measured distance and |dpax/ddist| is a Jacobian. This is a minimalist approach in that we assert only the conservation of probability in a change of variables [p(y)dy=p(x)dx]. [Note: The Jacobian is not needed if the mcmc sample variable is itself z=1/pax, and if the samples are converted to distance d=1/z].

p(dist|pax)~exp(-(pax-1/dist)^2/(2*sigma^2))*[dist^2*exp(-dist/lambda)]The term in the "[]" is the prior, and it reflects an expectation that stars in the Gaia survey most likely lie close to the Sun. Set lambda=0.1 kpc, meaning that we expect most stars to be within a kpc of the Sun.

Hints/comments:

The time series in files

~bromley/courses/ap7730/data/GW150914_H.dathave the strain signal from the first binary black hole merger event, GW150914, reported by LIGO. The first file is from the Hanford (WA) and Livingston (LA) detectors. These are text files with a single column of data, samples of the strain taken at a rate of 4096 HZ, spanning 32 seconds, and starting at a time specified in the file in a common time coordinate system. These files were downloaded from. this link

~bromley/courses/ap7730/data/GW150914_L.dat

The processing that the LIGO team uses to extract the signal is elegant. But the signal can at least be verified using a simple procedure involving a passband filter based on the power spectral density of the two data streams. The exercise here is to build this simple filter to extract the strain signal in the two time series.

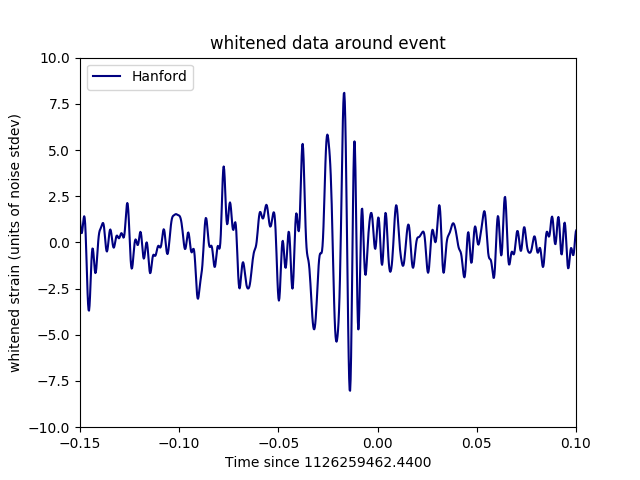

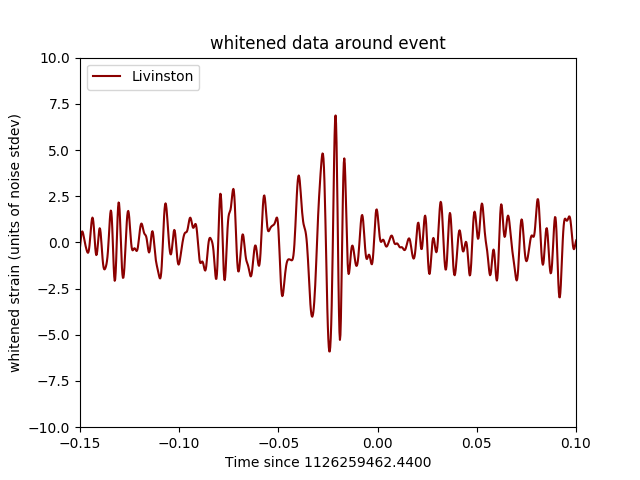

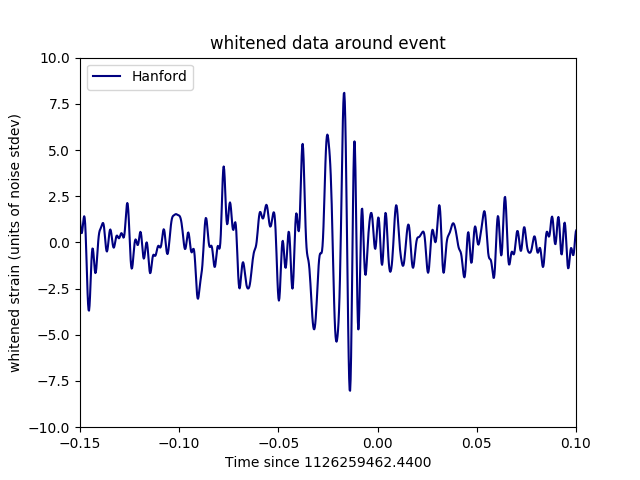

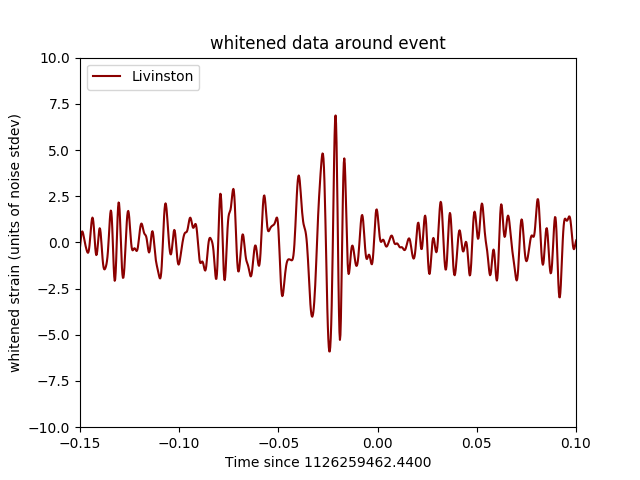

Write a script, gravwave.py, that does the filtering and generates a plot, gravwave.pdf, showing the strain in a 0.3 second time interval bracketing the event for both the Hanford and Livingston data (on trhe same plot). In the time coordinates correspondng to the data in the files, the time of the event is t=1126259462.44 seconds. In your plot, show time such that the event is at t=0 on the horizontal axis, and the signal strength in the vertical axis is in units of noise std dev (referring to the standard deviation of the filtered strain samples). These images show the LIGO team's resultsHere, you are after something similiar, not in exact wave form but in signal strength.

Details and hints.

Once you have your code working, there are lots of cool things to try. Examples include cross-correlating the two signals to figure out the lag, fitting wave forms, trying to pluck out the sinusoidal-ish signal before the chirp... In creating this problem, I worked from this website, very cool, shows the right way of approaching the data management and signal processing....Enjoy!